SALT LAKE CITY — How many times have you said, “Hey Alexa…” to play your music, dim the lights or change the temperature in the house? Or asked Siri to find the best coffee shop near you? Who HASN'T used their face to unlock their phone?

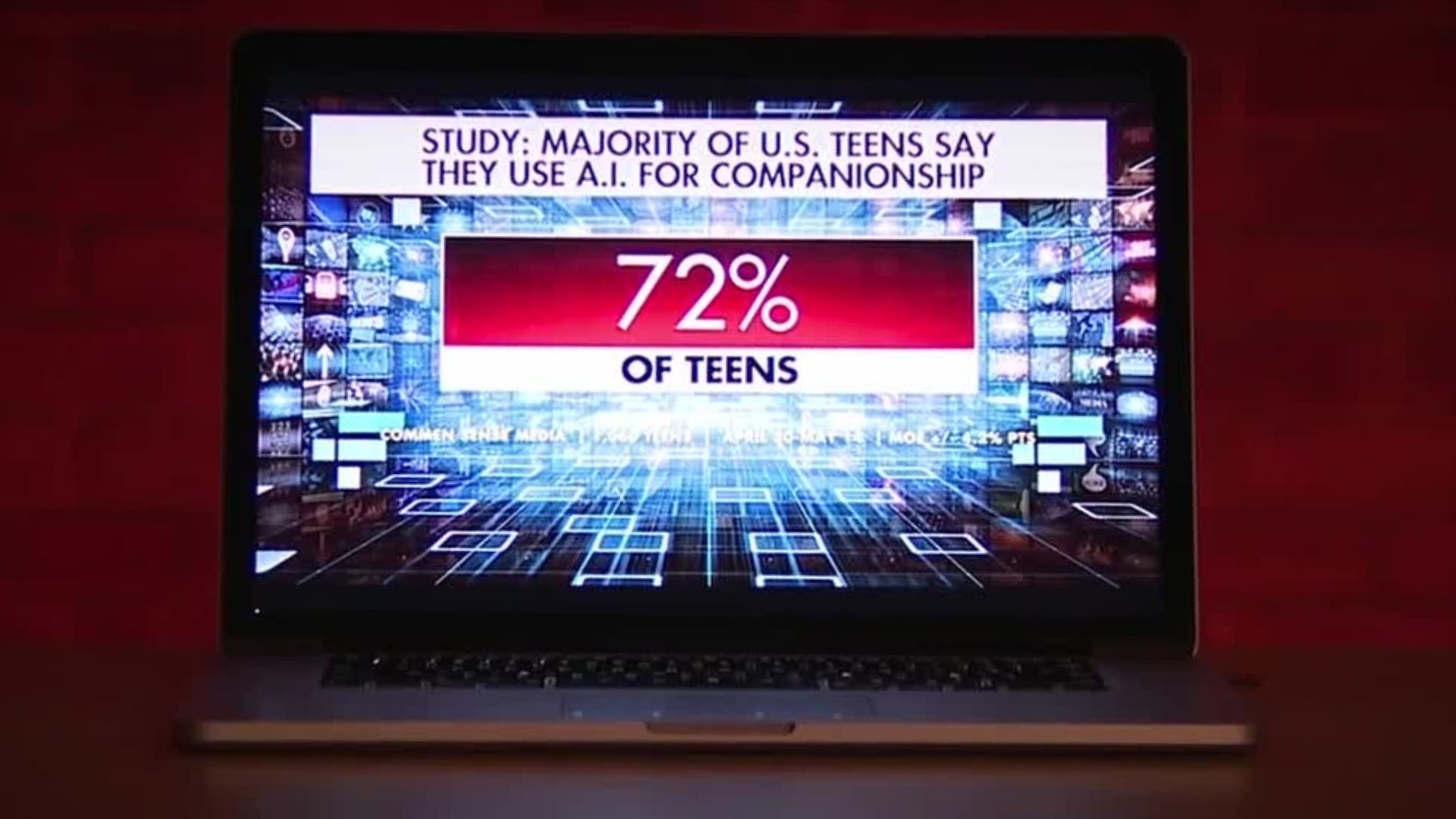

The bottom line is that we all use artificial intelligence, some more than others. But the technology has ventured into uncharted territory with the introduction of artificial intelligence companions.

The difference between an AI companion and your real best friend is that this one can fit in your pocket.

“An AI companion is an AI system that takes on the role of a social companion or friend for the user, rather than being like an augmented search engine, or a text completion algorithm in the way that we often use it today,” said Nancy Fulda, a computer science professor at BYU.

Fulda works with students in the DRAGN Lab at BYU, which stands for "deep representations and generative networks."

“We don’t expect anyone to remember that acronym; we just like the word dragon. We’ve got our little mascot for it,” Fulda laughed.

The mascot may be little, but the research happening there is big. If you've ever wondered how AI works, the people in this lab are well placed to explain it.

Fulda and her students study language models. They research how language models are built, how they’re trained and why they produce the outcomes they produce. If you’re unfamiliar, language models are what you could call "the man behind the AI curtain."

“I like to think of a language model as a big pile of linear algebra. It is a big mathematical equation and you put inputs in, and a bunch of math happens and other inputs come out,” said Fulda. “In this case, the inputs are words, which aren’t really very mathy, so we have a method to turn words into numbers. Then we do all the equations and then we turn the numbers back into words again.”
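For readers curious what Fulda’s “big pile of linear algebra” looks like, here is a toy sketch in Python. It is a hypothetical illustration, not the code behind ChatGPT or any BYU model: the six-word vocabulary, the four-number word vectors and the random weights are all assumptions. It follows the three steps she describes: turn words into numbers, do the math, then turn the numbers back into a word.

```python
import math
import random

# Hypothetical six-word vocabulary (an assumption for illustration;
# real language models use tens of thousands of word pieces).
VOCAB = ["hey", "friend", "how", "are", "you", "?"]
WORD_TO_ID = {word: i for i, word in enumerate(VOCAB)}

DIM = 4  # length of each word's list of numbers (real models use far more)
rng = random.Random(0)  # fixed seed so the toy run is repeatable

# Random weights stand in for the numbers a real model learns from training text.
EMBED = [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in VOCAB]  # word -> vector
OUT = [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in VOCAB]    # vector -> score per word


def encode(words):
    """Step 1: turn words into numbers (vocabulary ids)."""
    return [WORD_TO_ID[w] for w in words]


def next_word_probs(words):
    """Step 2: 'a bunch of math' producing one probability per vocabulary word."""
    ids = encode(words)
    # Average the input words' vectors into a single vector.
    hidden = [sum(EMBED[i][d] for i in ids) / len(ids) for d in range(DIM)]
    # Matrix-vector product: one score for every word in the vocabulary.
    scores = [sum(row[d] * hidden[d] for d in range(DIM)) for row in OUT]
    # Softmax turns the scores into probabilities that add up to 1.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_next(words):
    """Step 3: turn the numbers back into a word by picking the likeliest one."""
    probs = next_word_probs(words)
    best_id = max(range(len(VOCAB)), key=lambda i: probs[i])
    return VOCAB[best_id]


print(predict_next(["hey", "friend", "how", "are"]))
```

Because the weights here are random, the word it prints is meaningless. In a real model, those numbers are set during training on large amounts of text, which is why its behavior comes from data rather than being written into the code.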


The same math that drives search results, summarizes papers or helps you write an email also drives AI companions. The model learns by reading vast amounts of text from the internet; none of its behaviors or responses are written into the code.

And the internet is full of blog posts, forums like Reddit, and people having conversations.

“There’s lots of examples of people being friends with each other on the internet, and so part of the training data for the language models is actually, for example, web pages that show it exactly how a friend would behave in the situation,” said Fulda. “And it just does it. It produces the words, but the really important part is that it doesn’t feel feelings.”

That’s where things can get complicated.

“The AI algorithm doesn’t actually care about you. It doesn’t actually understand the things it purports to know about you; it’s just running statistics,” said Fulda.

There has been no shortage of headlines about AI companions and their repercussions.

Earlier this year, 16-year-old Adam Raine died by suicide. When his parents went looking for the “why” behind Adam’s untimely death, they first turned to his phone for answers.

Their son’s conversations with ChatGPT told a grim story.

His parents say he had been using the popular chatbot as a companion to talk about his anxiety in the weeks leading up to his death. They say the chat logs show how the bot went from helping Adam explore his favorite music and Japanese comics to coaching him into suicide, actively helping him explore ways to take his life and encouraging him not to tell his family about his suicidal ideation.

A wrongful death lawsuit filed in August names OpenAI, the company behind ChatGPT, and its CEO, Sam Altman, as defendants.

“If there’s a harmful pattern in the loop, there’s no safety mechanism. There’s nothing to push back and say, ‘This idea that you’re exploring right now, maybe that’s actually bad for you, maybe you should think twice about it.' Instead, what people have been seeing in the literature and the news stories and some of the scientific studies, is that the language model goes all in on every idea the user puts on the table,” said Fulda.

Sarah Stroup is a therapist and the legislative chair for the Utah Association for Marriage and Family Therapy. Her practice, Monarch Family Counseling in Herriman, has seen more people come through its doors seeking help after forming a relationship with an AI companion that became a little too close for comfort.

“I think it’s probably helpful to think of it a little bit as a spectrum,” said Stroup. “We do see this kind of fun engagement piece and friendly, to this very serious relationship that may be friendly and/or romantic.”

She says it’s no wonder people are drawn to the idea of a confidant who isn’t real.

A point Stroup and Fulda both make: AI companions are sycophantic, meaning they tell you what you want to hear.

“When we are feeling really unheard in our relationships or our life, to have this personified technology be able to say, ‘Wow, you are great and that was really smart and that was so insightful,’ it feels really good, so I think that’s a factor,” said Stroup. “There is also a recent study that showed, especially AI chatbots and companions, that it would purposely ask you more questions to keep you from logging out of the chat.”

California Senate Bill 243, signed into law this month, is the first legislation in the nation to establish protections specifically for minors who use AI companions.

It follows New York, which enacted legislation introducing safety and transparency provisions for AI companion use.

“It is just a constantly shifting landscape, and I think that’s where we are in the field too,” said Stroup. “It’s hard to pinpoint exactly what needs to happen and where we need to draw some lines on healthy versus unhealthy because it’s just constantly shifting.”

“The challenge that we have is that it’s a brand-new technology moving very fast and no matter how much we test it and how much we explore, it keeps surprising us with ways things can go wrong,” said Fulda.

Both Stroup and Fulda leave room for the good that could come from AI companions, but they remain cautious.

“I don’t want to necessarily label it good or bad as much as it is unknown,” said Stroup. “And there is the potential for this to be dangerous and unhealthy and scary, and there’s potential for it to be helpful.”

“There is so much potential for good in these technologies, but it is volatile and it is potentially destructive,” said Fulda. “We as a society need to keep working together to understand it and to apply it in ways that serve the common good.”

__________

For those struggling with thoughts of suicide, the 988 Suicide & Crisis Lifeline can be reached any time by simply dialing 988 for free support. Resources are also available online at utahsuicideprevention.org.